The News: The White House recently published its Blueprint for an AI Bill of Rights, a set of guidelines clearly intended to set the tone for future artificial intelligence (AI) development and risk management. Read the full Blueprint for an AI Bill of Rights here.

The U.S. Government Has Published a Blueprint for an AI Bill of Rights

Analyst Take: The U.S. government, through the White House Office of Science and Technology Policy (OSTP), recently released the Blueprint for an AI Bill of Rights, a document designed as a first step toward regulating AI in the U.S.

The U.S. government is in the early stages here, only now beginning to think about the potential risks posed by artificial intelligence and how to mitigate harm to American citizens in terms of data privacy, surveillance, and the risk of bias that AI inherently poses.

As is normally the case, the U.S. is somewhat behind others on this front. The European Union’s AI Act isn’t yet finalized, but it is in development and is largely expected to be enacted into law sometime in 2023. In mid-July of this year, the UK government outlined its own proposal as part of its National AI Strategy, a collaborative approach that’s been dubbed a “pro-innovation approach to regulating AI.” Given the well-known challenges the UK has with technology and its somewhat lackluster reputation for driving innovation, it’s not surprising to see its efforts here differ somewhat. The UK’s stated goals are spurring innovation, promoting investment, driving productivity, and boosting public trust in AI technology. The UK is also taking a less centralized approach than the EU overall, which some say might prove challenging.

The U.S. Government AI Bill of Rights

The blueprint published by the U.S. government is not a law, but instead a “call to action” containing guidelines on how to protect data, mitigate the risk of bias, minimize surveillance, and reduce the overall harm that AI might ultimately cause. Here’s a deeper look at the focus of this AI Bill of Rights thus far:

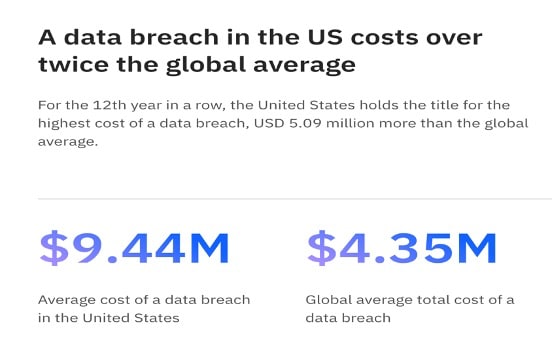

Data risks. Data breaches are pretty much a given for today’s organizations, and those breaches are expensive. According to IBM’s 2022 Cost of a Data Breach Report, a data breach in the U.S. costs over twice the global average.

While AI can help create safeguards and is an integral part of many cybersecurity protections offered by technology vendors, threat actors can also use AI for more sophisticated attacks.

Bias. Bias has always been an inherent problem as it relates to artificial intelligence. If the data used to train AI models is biased, of course the results will be biased as well. The U.S. Equal Employment Opportunity Commission has already sued more than a dozen companies for using AI in hiring that had a disparate impact on certain protected groups.

Loss of control and trust. As AI gets better at making decisions, humans will increasingly cede control to machines. But if something goes wrong, it will be hard to understand why the machine made the decisions it did, which could lead to a loss of trust in AI.

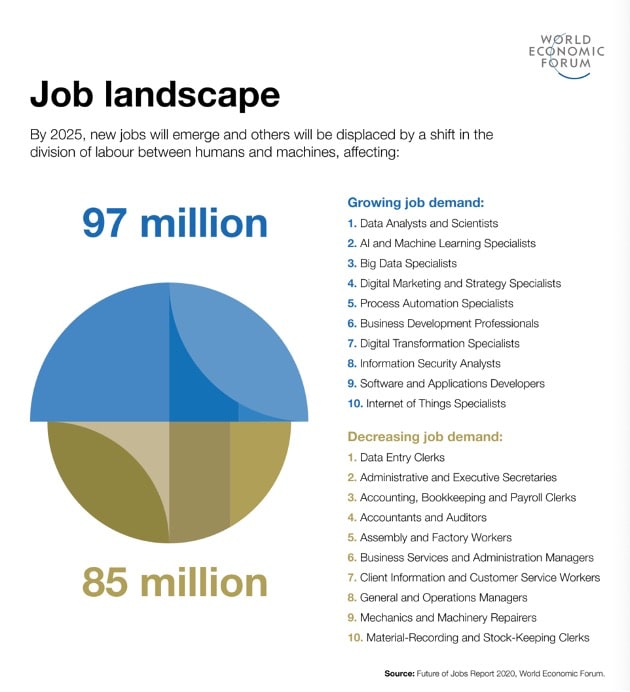

Unemployment. Concerns about artificial intelligence disrupting the workforce and “stealing” jobs have long troubled many. That said, we believe that AI will create more jobs than it destroys, and further, that AI will play a significant role in making work more enjoyable and effective for many, letting technology do the heavy lifting for its human counterparts and freeing them to do more important work and drive business benefits. In its Future of Jobs Report 2020, the World Economic Forum (WEF) estimated that by 2025, demand will decline for 85 million job roles while demand will grow for 97 million job roles. The global Covid-19 pandemic accelerated the use of AI in many instances, speeding up digital transformation efforts for many organizations and benefiting consumers in myriad ways.

The Focus of the Blueprint for the AI Bill of Rights

The focus of the AI Bill of Rights is on protecting people’s freedoms and benefits by advancing these five core principles:

- Safe and effective systems

- Protection from discrimination

- Human alternatives, consideration, and fallback

- Data protection

- Notice and explanation

The Severe Limitations of the Blueprint for the AI Bill of Rights

The White House hopes that its Blueprint for the AI Bill of Rights will guide both the public and private sectors, tech companies in particular, in developing and using AI-powered technology.

Currently, however, the blueprint is a perfect example of something that’s paved with good intentions but has no bite. First of all, it is not a law. It’s a set of guiding principles, which are good but voluntary. In fact, it doesn’t impose any penalty or punishment on any entity that “violates” it.

This Blueprint is not a set of rules or regulations; it is a framework and, ultimately, a set of suggestions. The U.S. government has no real power to enforce these suggestions. And even if it did, it would be going against the very nature of AI, which is to automate and do things faster and better than humans. Second, it doesn’t speak about the clearest option to prevent harm, which is to ban the use and deployment of the technology. For example, the EU’s proposed AI Act would prohibit most law enforcement use of real-time facial recognition in publicly accessible spaces. This is a blunt but effective way to prevent its misuse. By contrast, the U.S. government has only asked companies to be “transparent” about their use of the technology. It is hard to write that without laughing a little because, well, organizations and transparency often don’t mix.

Conclusion

The U.S. government’s AI Bill of Rights is well-intentioned, and it’s a good first step in the right direction. But without more specifics and enforcement mechanisms, it is unlikely to have much impact. The EU’s approach of banning certain uses of the technology is a more effective way to protect people’s rights, but it also stifles innovation, which is problematic in its own way. The best way forward would be for the U.S. government to develop a more specific and enforceable law that balances protecting individual rights with promoting innovation.

Disclosure: Fatty Fish is a research and advisory firm that engages or has engaged in research, analysis, and advisory services with many technology companies, including those mentioned in this article. The author does not hold any equity positions with any company mentioned in this article.

Shelly Kramer, https://staging-fattyfish.kinsta.cloud/author/shelly-kramer/, January 25, 2023
